Research Directions

Our current research is focused on enabling situated multi-party human-robot interactions. In general, our work combines elements from machine learning, artificial intelligence, social psychology, and design.

Social Group Phenomena

We study group phenomena that are typical of human social encounters in the context of Human-Robot Interaction (HRI). Our hypothesis is that by studying group phenomena in HRI, we will be able to devise mechanisms to help robots cope with the complexity of multi-party interactions.

Our work in this direction has validated the idea that facing formations, or F-Formations, a term coined by A. Kendon, emerge in Human-Robot Interaction [HRI, 2014; HRI LBR, 2015]. We have also proposed methods for studying group interactions with robots [CSCW WS, 2017], and studied social influence in HRI [CTS, 2011; HRI, 2018]. We are particularly excited about the idea of prosocial robotics: robots that help people even if it is costly to them, or robots that motivate people to help other agents [HRI, 2020].

Social Perception

Our research investigates fundamental principles and algorithms to enable autonomous perception of human behavior, group social phenomena, and the social context of human-robot interactions. For example, we have worked on enabling robots to identify spatial patterns of behavior that are typical of social group conversations [IROS, 2015; CSCW, 2020]. We are interested in developing methods to enable situated, interactive agents to reason about verbal [EMNLP, 2018] and non-verbal human behavior [HRI, 2017].

Autonomous Social Robot Behavior

We believe that machine learning is a key enabler for autonomous, social robot behavior in unstructured human environments. Thus, we explore using data-driven learning methods, often in combination with model-based approaches, to enable robots to act in social settings. For example, we have used reinforcement learning to generate non-verbal robot behavior during group conversations [RO-MAN, 2016]. We have also explored imitation learning to enable robot navigation in human environments [ICRA, 2019; RSS, 2019].

Current Projects


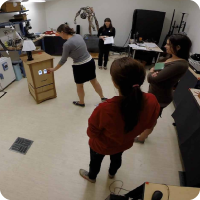

Spatial Patterns of Behavior in HRI Under Environmental Spatial Constraints

This project aims to advance autonomous reasoning about spatial patterns of group behavior during human-robot conversations. It provides the empirical knowledge and methods needed to incorporate spatial constraints into the way robots reason about human (and robot) spatial formations. This project is funded by the National Science Foundation.

Shutter, the Robot Photographer

This project investigates human-robot interactions in the wild with a robot photographer. The photography application serves to study fundamental questions in HRI, including: what kinds of behaviors are suitable for initiating situated social encounters, and how can robots adapt to changes in their social context?

Group Social Influence

This project investigates group social influence in Human-Robot Interaction. How can robots shape group dynamics? What underlying factors motivate changes in human behavior as a result of specific perspectives taken by a subgroup of a multi-party human-robot interaction?

Non-Verbal Robot Behavior Coordination

This project studies the effects of multi-modal robot behaviors. For example, how do people perceive coordinated gaze and head motions in group human-robot interactions? We want to understand how to better design and implement these types of behaviors for situated social encounters.

Robot Navigation in Human Environments

This project explores the possibilities of enabling complex robot navigation in human environments. We explore two types of approaches: methods that combine planning on traditional metric maps with learned navigation policies, and behavioral navigation methods that do not use explicit metric representations. Recently, we developed the Social Environment for Autonomous Navigation (SEAN) to facilitate early development of navigation systems and benchmarking. SEAN was supported by a 2019 Amazon Research Award.