Spatial Patterns of Behavior in HRI Under Environmental Spatial Constraints

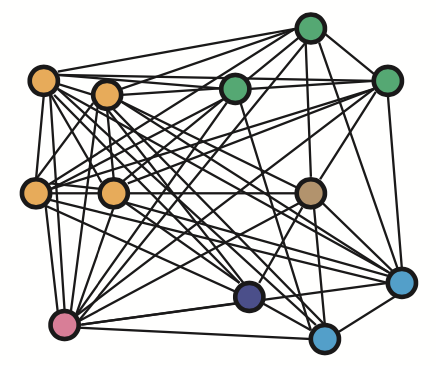

Robots need to recognize social group conversations in order to adapt their behavior to different social contexts in dynamic environments. One way to give them this ability is to provide methods that identify the spatial patterns of human behavior that typically emerge during social conversations. These patterns are often observed as face-to-face, side-by-side, or circular arrangements; however, the specific arrangement that emerges ultimately depends on many social factors, including environmental spatial constraints.

This project provides the empirical knowledge and methods needed to incorporate spatial constraints into the way robots reason about human (and robot) spatial formations. In particular, we focus on studying:

1) How do spatial constraints influence conversational group formations in HRI?

2) How can robots detect these formations under spatial constraints?

3) How can robots autonomously generate appropriate spatial behavior to sustain conversations in spatially constrained environments?

Publications

M. Swofford, J. Peruzzi, N. Tsoi, S. Thompson, R. Martín-Martín, S. Savarese, M. Vázquez. Improving Social Awareness Through DANTE: Deep Affinity Network for Clustering Conversational Interactants. Proc. ACM Hum.-Comput. Interact. 4, CSCW1, 2020.

J. Connolly, N. Tsoi and M. Vázquez. Perceptions of Conversational Group Membership based on Robots’ Spatial Positioning: Effects of Embodiment. Companion of the 2021 ACM/IEEE Int'l Conf. on Human-Robot Interaction, 2021.

N. Tsoi, J. Connolly, E. Adéníran, A. Hansen, K. T. Pineda, T. Adamson, S. Thompson, R. Ramnauth, M. Vázquez, and B. Scassellati. Challenges Deploying Robots During a Pandemic: An Effort to Fight Social Isolation Among Children. Proc. of the 2021 ACM/IEEE Int'l Conf. on Human-Robot Interaction, 2021.

S. Thompson*, A. Gupta*, A. W. Gupta, A. Chen and M. Vázquez (* denotes equal contribution). Conversational Group Detection with Graph Neural Networks. Proc. of the 23rd ACM International Conference on Multimodal Interaction (ICMI), 2021.

M. Vázquez, A. Lew, E. Gorevoy, and J. Connolly. Pose Generation for Social Robots in Conversational Group Formations. Frontiers in Robotics and AI, vol. 8, 2022.

N. Tsoi, K. Candon, D. Li, Y. Milkessa, and M. Vázquez. Bridging the Gap: Unifying the Training and Evaluation of Neural Network Binary Classifiers. Advances in Neural Information Processing Systems, 2022.

Acknowledgements

This material is based upon work supported by the National Science Foundation under Grant No. IIS-1924802. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.
