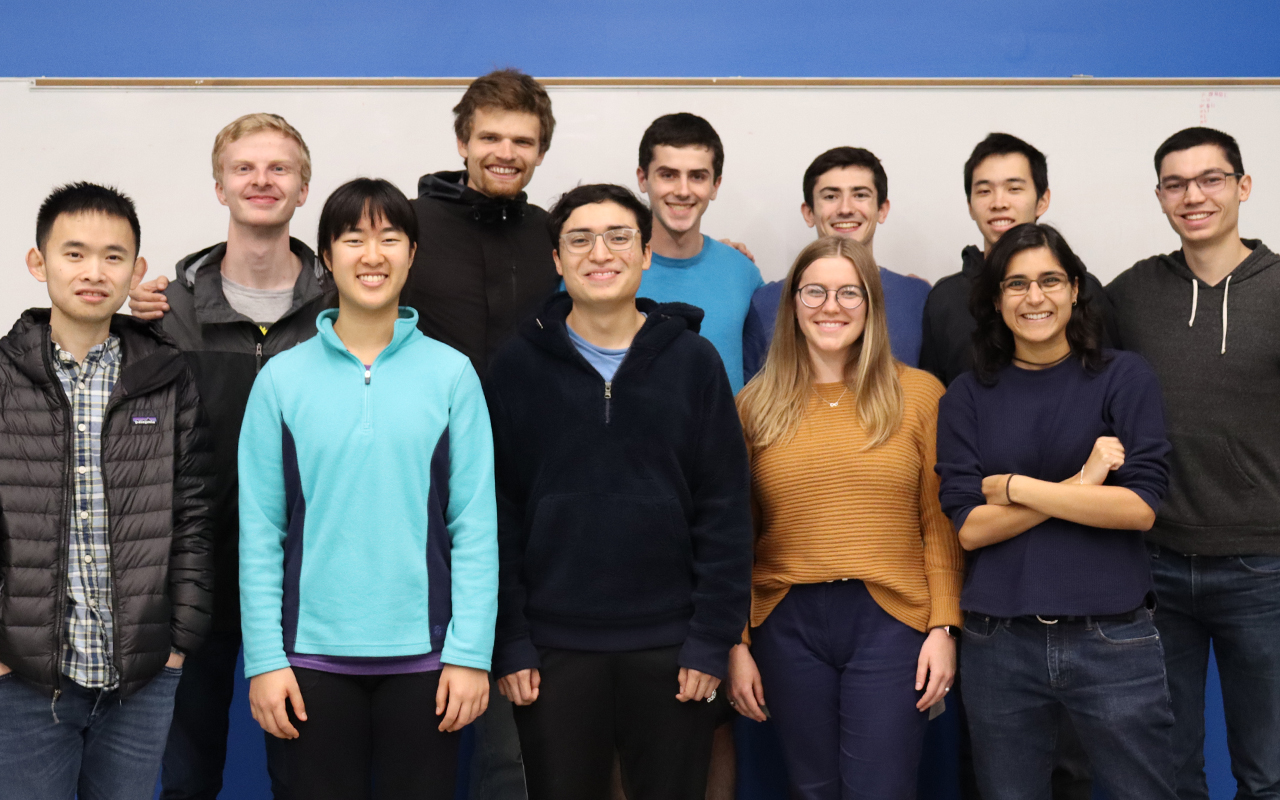

Welcome to the website of the Interactive Machines Group (IMG)!

IMG is an interdisciplinary research group led by Marynel Vázquez in Yale's Computer Science Department.

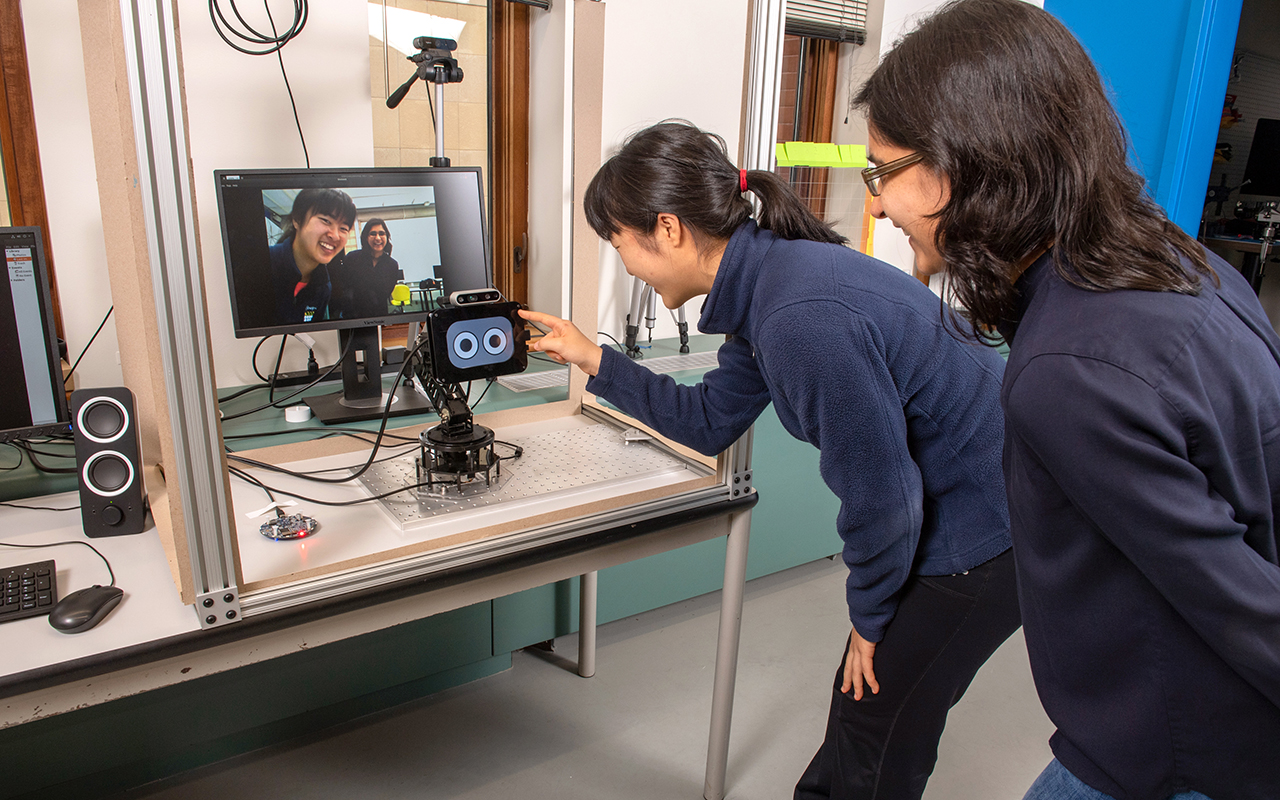

Our group studies fundamental problems in Human-Robot Interaction, which often leads us to explore research directions that can advance Human-Computer Interaction, Robotics, and applied Machine Learning more broadly. In particular, our current research agenda focuses on creating a new generation of robots that can effectively adapt to varied social contexts, engaging and sustaining interactions with multiple users in dynamic human environments such as our university campus or museums. We spend our days building novel computational tools, prototyping interactive systems, and running user experiments, both to better understand interactions with technology and to validate our methods. More information about current research directions can be found on the Research page.

Want to interact with robots? Information about ongoing studies can be found on the Studies page.

News


IMG members travel to London

Kate Candon and Sydney Thompson presented their research at AAMAS'23, and Marynel Vázquez presented at two ICRA'23 workshops.

IMG participates in the AAAI 2023 Spring Symposium Series

Nathan Tsoi and Marynel Vázquez coauthored a paper with many collaborators on Benchmarking Social Robot Navigation, presented at the 2023 AAAI Spring Symposium Series. The paper was nominated for the Best Paper Award at the Symposium on HRI in Academia and Industry!

Paper about Transparent Matrix Overlays wins Best Technical Paper at HRI'23!

Congratulations to Jake Brawer and the other authors of 'Interactive Policy Shaping for Human-Robot Collaboration with Transparent Matrix Overlays'.

HRI'23: Three new full papers, one demo, and workshop presentations!

IMG participated in many ways in the 2023 ACM/IEEE International Conference on Human-Robot Interaction.

IMG participates in HAI'22 and CoRL'22

Kate Candon, Debasmita Ghose, and Sarah Gillet will be presenting new work in New Zealand! Their presentations will cover how people perceive helping behaviors from an agent, how robots can learn visual object representations tailored to human requirements, and how a robot can learn whom to address during group interactions.

New NeurIPS 2022 publication

IMG students developed a novel approach to approximating metrics based on confusion matrix values (such as the F-β score) and using them to train binary neural network classifiers. As shown in a new NeurIPS paper, the approach works particularly well with imbalanced datasets.

Lab Location

Yale University, AKW 400

51 Prospect Street

New Haven, CT 06511